A video that uses artificial intelligence to mimic Vice President Kamala Harris’s voice has sparked significant concern about AI’s potential to spread misinformation, with Election Day just three months away.

The video was shared by tech billionaire Elon Musk on his social media platform X. Musk’s post did not initially label it as a parody, which caused widespread confusion and debate.

The Controversial Video

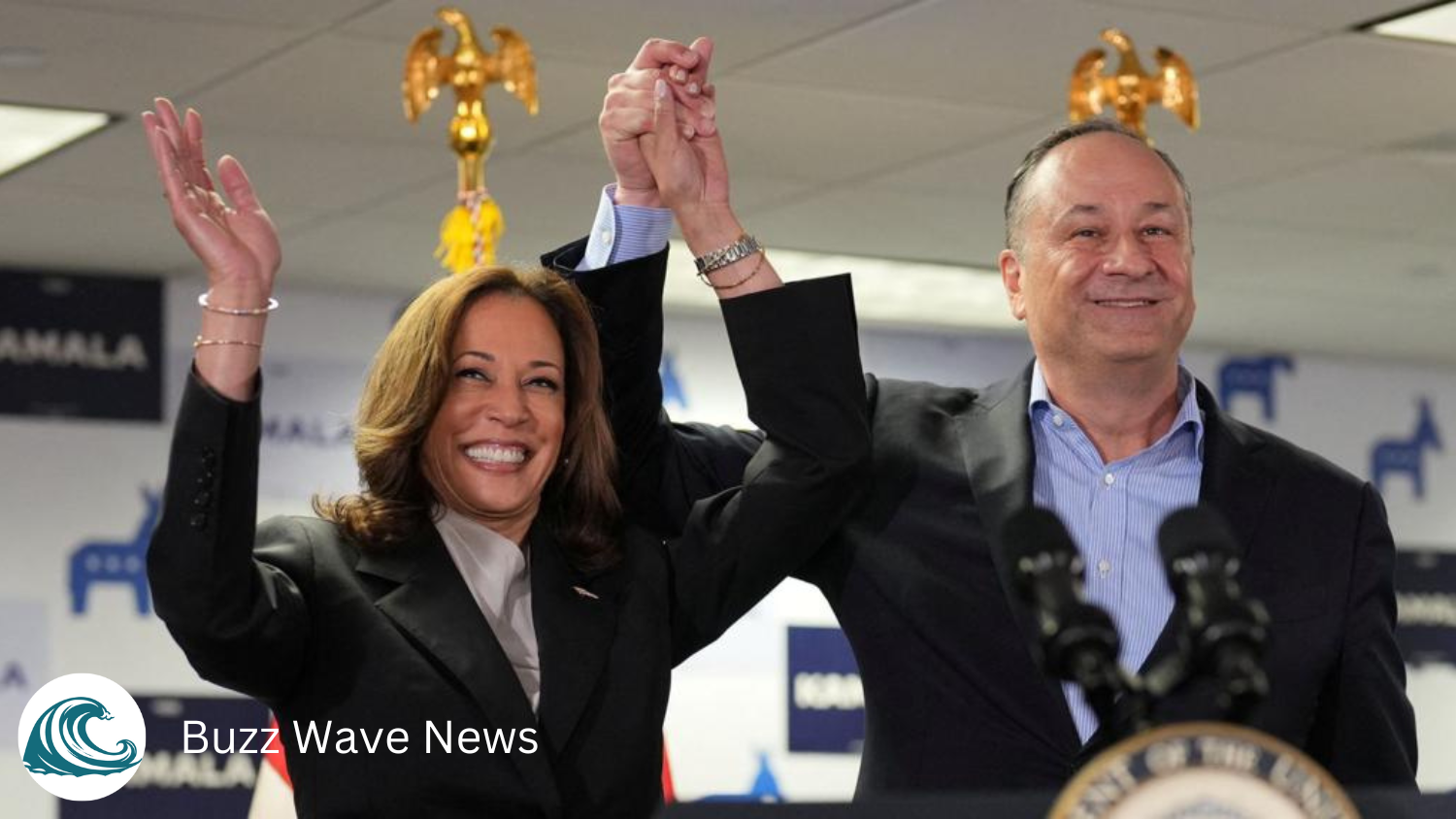

The video uses AI voice-cloning technology to convincingly impersonate Vice President Kamala Harris, putting words in her mouth that she never said. The fake ad closely mirrors the visuals of a real campaign ad released by Harris, who is expected to be the Democratic presidential nominee, but replaces the voice-over with inflammatory and misleading statements. For example, the AI-generated voice claims that Harris is running for president because President Joe Biden displayed senility at a debate, and it calls Harris a “diversity hire” because of her gender and race, asserting that she is unfit to run the country.

Elon Musk’s Involvement

Musk shared the video on X without clearly indicating that it was a parody. It was not until Sunday evening that he pinned the original creator’s post to his profile and, with a pun, made clear that the video was intended as satire, arguing that parody is not a crime. By then, however, the video had already gained significant traction, raising alarms about the potential misuse of AI in political contexts.

The Power and Risks of AI in Misinformation

The incident underscores how capable AI has become at producing realistic but false content. The AI-generated voice in the video is strikingly similar to Harris’s real voice, making it difficult for viewers to distinguish genuine content from fabricated content. The ability to convincingly mimic public figures poses a serious threat, especially in politics, where misinformation can sway public opinion and electoral outcomes.

The Ethical Concerns

The use of AI to create fake videos and audio clips raises ethical questions about the responsibilities of those who develop and distribute such technologies. There is growing concern about how these tools can be regulated to prevent their misuse without stifling innovation. The incident involving the Kamala Harris video exemplifies the urgent need for guidelines and policies to address the ethical implications of AI-generated content.

The Broader Impact on the Election

With Election Day approaching, the spread of misinformation through AI-generated content could have significant repercussions. The potential for such videos to go viral and shape voters’ perceptions makes it imperative to address these challenges promptly. Policymakers, technology companies, and society at large must collaborate to find solutions that balance technological advancements with ethical considerations and the need for accurate information.

Potential Solutions

Several potential solutions can be explored to mitigate the risks posed by AI-generated misinformation:

1. Technological Safeguards: Developing advanced algorithms and tools that can detect and flag AI-generated content, helping users identify potential misinformation.

2. Policy and Regulation: Implementing policies that require clear labeling of AI-generated content and holding creators accountable for spreading false information.

3. Public Awareness: Educating the public about the capabilities and risks of AI technology, fostering the critical thinking and media literacy that help individuals distinguish genuine content from fakes.

Conclusion

The controversy surrounding the AI-generated Kamala Harris video highlights the urgent need to address the ethical and practical challenges that AI poses for the dissemination of information. As Election Day nears, ensuring the integrity of information shared with the public is paramount. Collaboration between technology developers, policymakers, and society is crucial to harnessing the benefits of AI while mitigating its potential for harm. The incident serves as a wake-up call about the profound impact AI can have on politics and the importance of vigilance against emerging technological threats.